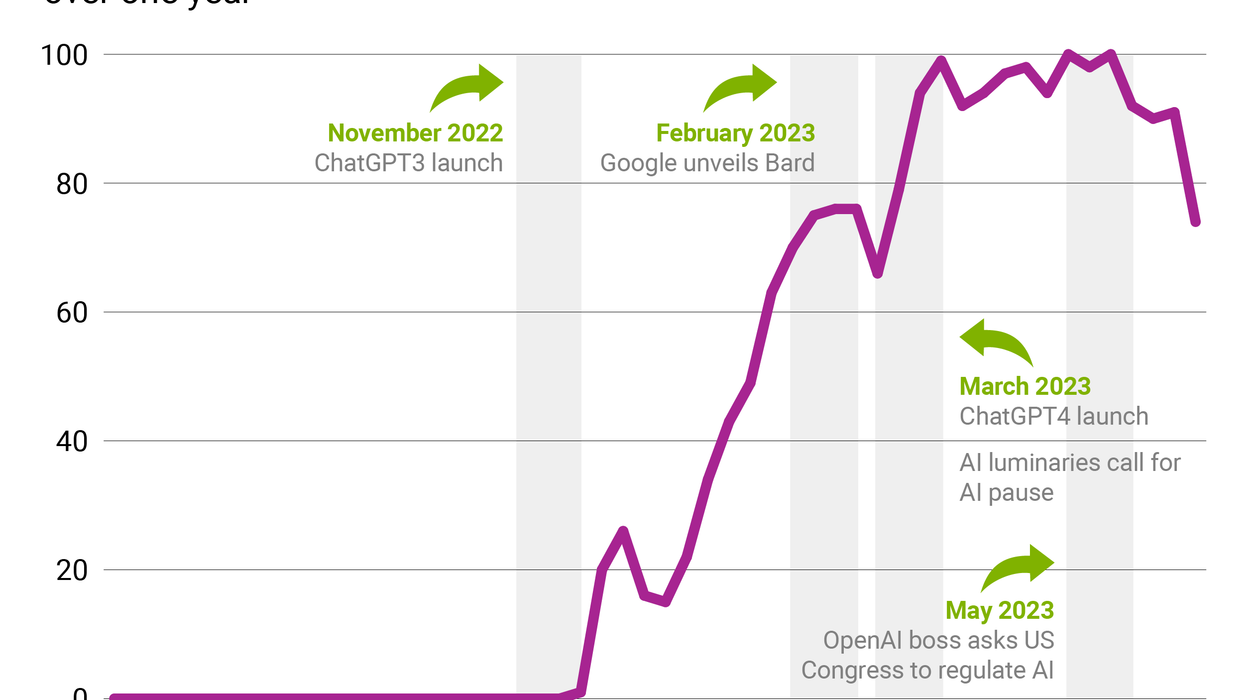

The emergence of AI has amplified the problem of disinformation that has proliferated since the 2016 US presidential election, and regulation is often touted as a way to address this worsening issue.

But even if governments do find a way to effectively regulate AI – and that’s a big if – that doesn’t address the widespread demand for conspiracy theories that confirm people’s established political and cultural biases.

Indeed, in this hyper-charged partisan environment, the desire to create and access this sort of content has only grown – and AI is about to supercharge it.

In some instances, it already is: The campaign of GOP presidential candidate and Florida Gov. Ron DeSantis recently shared AI-generated fake images of Donald Trump, his main rival, embracing Dr. Anthony Fauci, a divisive figure in right-wing political circles. Many DeSantis followers bought it, even after the photos were debunked as fakes.

This, of course, isn't new. People say that they want access to truthful information, but research suggests otherwise. For example, when researching misinformation in 2020, Australian analysts found that "unless great care is taken, any effort to debunk misinformation can inadvertently reinforce the very myths one seeks to correct." Demand for information that confirms personal prejudices is rampant, and loosely regulating AI is not going to quench that thirst.