Europe has spent two years trying to adopt comprehensive AI regulation. The AI Act, first proposed by the European Commission in 2021, would regulate AI models according to their risk category.

The proposed law would ban dangerous models outright, such as those that might manipulate humans, and mandate strict oversight and transparency for powerful models that carry the risk of harm. For lower-risk models, the AI Act would require simple disclosures. The makers of generative AI models, like the one powering ChatGPT, would have to submit to safety checks and publish summaries of the copyrighted material their models are trained on. In May, the European Parliament approved the legislation, but the three bodies of the European legislature are still hammering out the final text.

Bump in the road: Last week, France, Germany, and Italy dealt the AI Act a setback by reaching an agreement that supports “mandatory self-regulation through codes of conduct” for AI developers building so-called foundation models. These are models that are trained on massive sets of data and can be used for a wide range of applications, including OpenAI’s GPT-4, the large language model that powers ChatGPT. This surprise deal represents a desire to bolster European AI firms at the expense of the effort to hold them legally accountable for their products.

The view of these countries, three of the most powerful in the EU, is that the application of AI should be regulated, not the technology itself, which is a departure from the EU’s existing plan to regulate foundation models. While the tri-country proposal would require developers to publish information about safety tests, it doesn’t demand penalties for withholding that information — though it suggests that sanctions could be introduced.

A group of tech companies, including Apple, Ericsson, Google, and SAP, signed a letter backing the proposal: “Let's not regulate [AI] out of existence before they get a chance to scale, or force them to leave,” the group wrote.

But the proposal angered European lawmakers who favor the AI Act. “This is a declaration of war,” one member of the European Parliament told Politico, which suggested that this “power grab” could even end progress on the AI Act altogether. A fifth round of European trilogue discussions is set for Dec. 6, 2023.

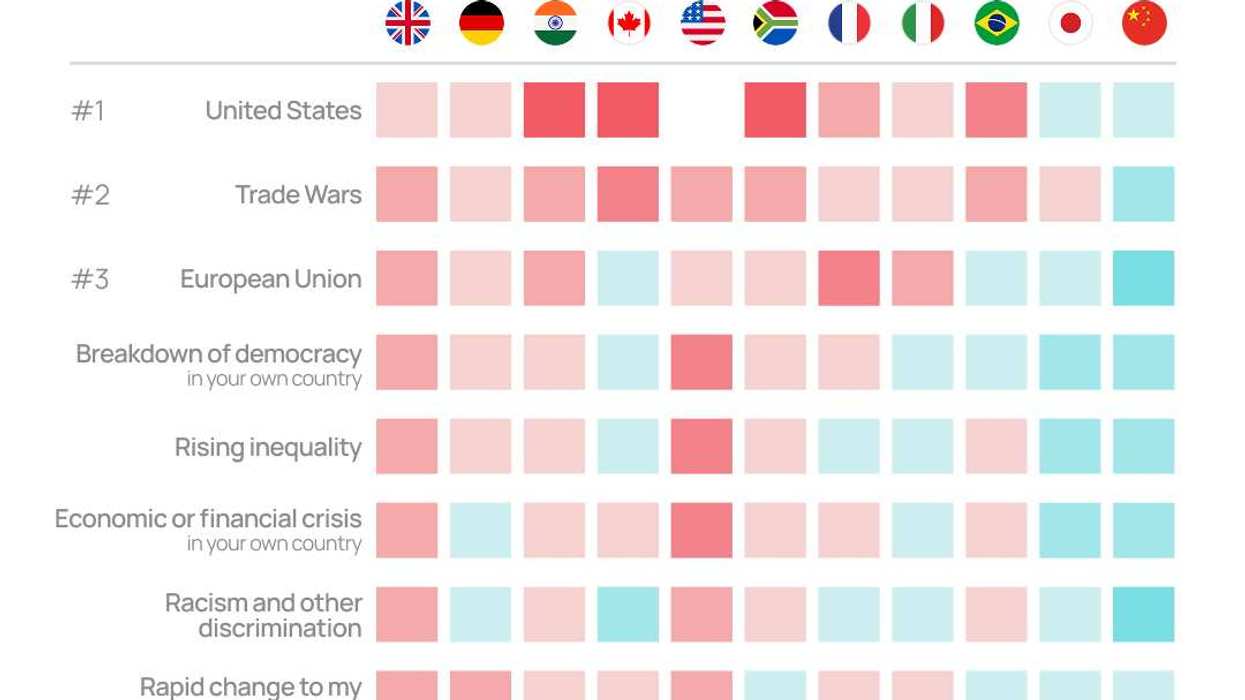

EU regulators have grown hungry to regulate AI, in contrast to the more laissez-faire approach of the United Kingdom under Prime Minister Rishi Sunak, whose recent Bletchley Declaration, signed by countries including the US and China, was widely considered nonbinding and light-touch. Now, the three largest economies in Europe, France, Germany, and Italy, have brought that thinking to EU negotiations, and they need to be appeased. Europe’s Big Three not only carry political weight but can form a blocking minority in the Council of the European Union if there’s a vote, says Nick Reiners, senior geotechnology analyst at Eurasia Group.

Reiners says the wrench thrown by the three countries makes it unlikely that the AI Act’s text will be agreed upon by the original target date of Dec. 6. But there is still strong political will on both sides, he says, to reach a compromise before next June’s European Parliament elections.