Last week, Meta CEO Mark Zuckerberg announced his intention to build artificial general intelligence, or AGI (a standard whereby AI will have human-level intelligence in all fields), and said Meta will have 350,000 high-powered NVIDIA graphics chips by the end of the year.

Zuckerberg isn’t alone in his intentions – Meta joins a long list of tech firms trying to build a super-powered AI. But he is alone in saying he wants to make Meta’s AGI open-source. “Our long-term vision is to build general intelligence, open source it responsibly, and make it widely available so everyone can benefit,” Zuckerberg said. Um, everyone?

Critics have serious concerns about the advent of the still-hypothetical AGI. Publishing such technology on the open web is a whole other story. “In the wrong hands, technology like this could do a great deal of harm. It is so irresponsible for a company to suggest it,” University of Southampton professor Wendy Hall, who advises the UN on AI issues, told The Guardian. She added that it is “really very scary” for Zuckerberg to even consider it.

Unpacking Meta’s shift in AI focus

Meta has been developing artificial intelligence for more than a decade. The company first hired the esteemed academic Yann LeCun to helm a research lab originally called FAIR, or Facebook Artificial Intelligence Research, and now called Meta AI. LeCun, a Turing Award-winning computer scientist, splits his time between Meta and his professorial post at New York University.

But even with LeCun behind the wheel, most of Meta’s AI work was meant to supercharge its existing products — namely, its social media platforms, Facebook and Instagram. That included the ranking and recommendation algorithms for the apps’ news feeds, image recognition, and its all-important advertising platform. Meta makes most of its money on ads, after all.

While Meta is a closed ecosystem for users posting content or advertisers buying ad space, it is considerably more open on the technical side. “They're a walled garden for advertisers, but they've always pitched themselves as an open platform when it comes to tech,” said Yoram Wurmser, a principal analyst at Insider Intelligence. “They explicitly like to differentiate themselves in that regard from other tech companies, particularly Apple, which is very guarded about their software platforms.” Differentiation like that can help Meta attract talent from elsewhere in Silicon Valley, but especially from academia, where open-source publishing is the standard – as opposed to proprietary research that might never even see the light of day.

Opening the door

When Meta was building its generative AI models early last year, its decision to go open-source, publishing the code of its LLaMA language model for all to use, was born out of FOMO (fear of missing out) and frustration. In early 2023, OpenAI was getting all of the buzz for its groundbreaking chatbot ChatGPT, and Meta, a Silicon Valley stalwart that’s been in the AI game for more than a decade, reportedly felt left behind.

So LeCun proposed open-sourcing Meta’s large language model (once called Genesis and renamed to the infinitely more catchy LLaMA). Meta’s legal team cautioned that doing so could put Meta further in the crosshairs of regulators, who might be concerned about such a powerful codebase living on the open internet, where bad actors such as criminals and foreign adversaries could leverage it. Feeling the heat and the urgency of the moment for attracting talent, hype, and investor fervor, Zuckerberg agreed with LeCun, and Meta released its original LLaMA model in February 2023. Meta followed with LLaMA 2, released in partnership with OpenAI backer Microsoft in July, and has publicly confirmed it’s working on the next iteration, LLaMA 3.

Pros and cons of being an open book

Meta is one of the few AI-focused firms currently making its models open-source. There’s also the US-based startup Hugging Face, which oversaw the development of a model called Bloom, and the French firm Mistral AI, which has multiple open-source models. But Meta is the only established Silicon Valley giant pursuing this high-risk route head-on.

The potential reward is clear: Open-source development might help Meta attract top engineers, and its accessibility could make it the default system for tinkerers unwilling or unable to shell out for enterprise versions of OpenAI’s GPT-4. “It also gets a lot of people to do free labor for Meta,” said David Evan Harris, a public scholar at UC Berkeley and a former research manager for responsible AI at Meta. “It gets a lot of people to play with that model, find ways to optimize it, find ways of making it more efficient, find ways of making it better.” Open-source software encourages innovation and can enable smaller companies or independent developers to build out new applications that might’ve been cost-prohibitive otherwise.

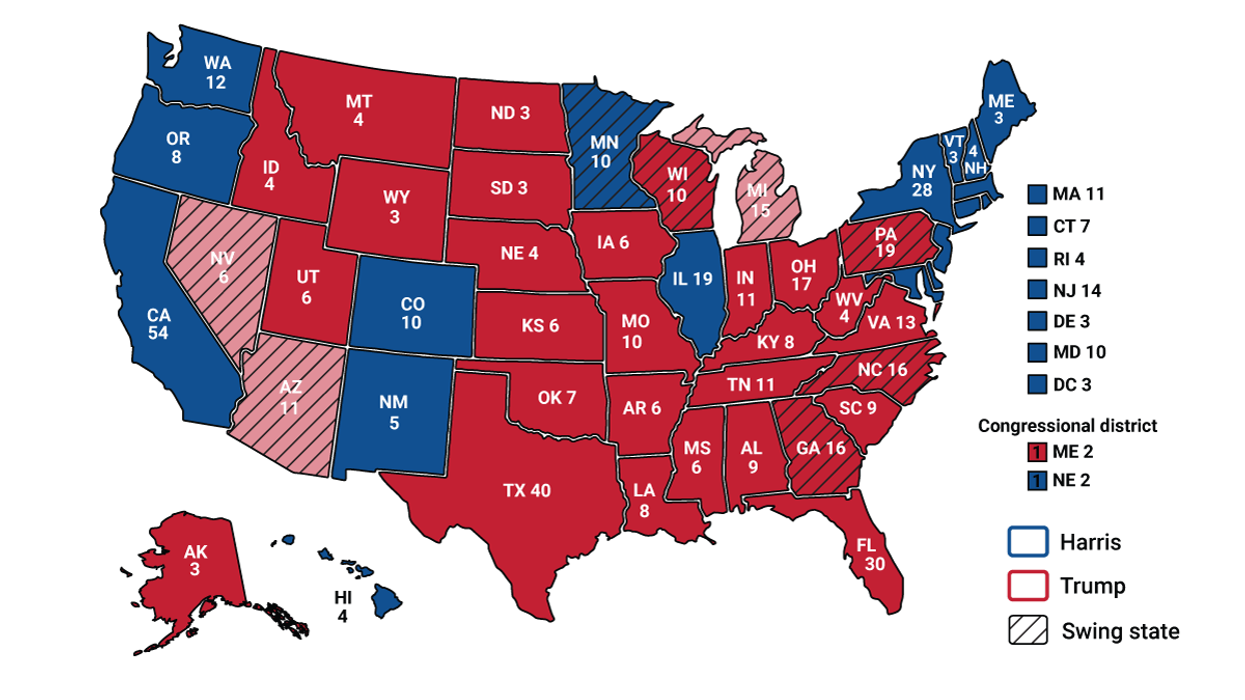

But the risk is clear too: When you publish software on the internet, anyone can use it. That means criminals could use open models to perpetrate scams and fraud, and generate misinformation or non-consensual sexual material. And, of pressing interest to the US, foreign adversaries will have unfettered access too. Harris says that an open-source language model is a “dream tool” for people trying to further sow discord around elections, deceive voters, and instill distrust in reliable democratic systems.

Regulators have already expressed concern: US Sens. Josh Hawley and Richard Blumenthal sent a letter to Meta last summer demanding answers about its language model. “By purporting to release LLaMA for the purpose of researching the abuse of AI, Meta effectively appears to have put a powerful tool in the hands of bad actors to actually engage in such abuse without much discernable forethought, preparation, or safeguards,” they wrote.

The Biden administration directed the Commerce Department in its October AI executive order to investigate the risk of “widely available” models. “When the weights for a dual-use foundation model are widely available — such as when they are publicly posted on the Internet — there can be substantial benefits to innovation, but also substantial security risks, such as the removal of safeguards within the model,” the order says.

Open-source purists might say that what Meta is doing is not truly open-source because it has usage restrictions: For example, Meta doesn’t allow the model to be used by companies with more than 700 million monthly users without a license or by anyone who doesn’t disclose “known dangers” to users. But these restrictions are merely warnings without a real method of enforcement, Harris says: “The threat of lawsuit is the enforcement.”

That might deter Meta’s biggest corporate rivals, such as Google or TikTok, from pilfering the company’s code to boost their own work, but it’s unlikely to deter criminals or malicious foreign actors.

Meta is reorienting its ambitions around artificial intelligence. Yes, Meta has bet big on the metaverse, an all-encompassing digital world powered by virtual and augmented reality technology, going so far as to change its official name from Facebook to reflect its ambitions. But the metaverse hype has been largely replaced by AI hype, and Meta doesn’t want to be left behind — certainly not for something it’s been working on for a long time.