GZERO AI

The latest on artificial intelligence and its implications - from the GZERO AI newsletter.

Taylor Owen, professor at the Max Bell School of Public Policy at McGill University and director of its Centre for Media, Technology & Democracy, co-hosts GZERO AI, our new weekly video series intended to help you keep up with and make sense of the latest news on the AI revolution. In this episode of the series, Taylor Owen takes a look at the rise of AI agents.

Today I want to talk about a recent big step towards the world of AI agents. Last week, OpenAI, the company behind ChatGPT, announced that users can now create their own personal chatbots. Prior to this, tools like ChatGPT were primarily useful because they could answer users' questions, but now they can actually perform tasks. They can do things instead of just talking about them. I think this really matters for a few reasons. First, AI agents are clearly going to make some things in our life easier. They're going to help us book travel, make restaurant reservations, manage our schedules. They might even help us negotiate a raise with our boss. But the bigger news here is that private corporations are now able to train their own chatbots on their own data. So a medical company, for example, could use personal health records to create virtual health assistants that could answer patient inquiries, schedule appointments or even triage patients.

Second, this, I think, could have a real effect on labor markets. We've been talking about this for years, that AI was going to disrupt labor, but it might actually be the case soon. If you have a triage chatbot, for example, you might not need a big triage center, and therefore you'd need fewer nurses and fewer medical staff. But having AI in the workplace could also lead to fruitful collaboration. AI is becoming better than humans at breast cancer screening, for example, but humans will still be a real asset when it comes to making high-stakes life-or-death decisions or delivering bad news. The key point here is that there's a difference between technology that replaces human labor and technology that supplements it. We're at the very early stages of figuring out the balance.

And third, AI safety researchers are worried about these new kinds of chatbots. Earlier this year, the Center for AI Safety listed autonomous agents as one of its catastrophic AI risks. Imagine a chatbot being programmed with incorrect medical data, triaging patients in the wrong order. This could quite literally be a matter of life or death. These new agents are a clear demonstration of the growing disconnect between the pace of AI development, the speed with which new tools are being developed and let loose on society, and the pace of AI regulation to mitigate the potential risks. At some point, this disconnect could just catch up with us. The bottom line, though, is that AI agents are here. As a society, we'd better start preparing for what this might mean.

I'm Taylor Owen, and thanks for watching.

More from GZERO AI

What we learned from a week of AI-generated cartoons

April 01, 2025

Nvidia delays could slow down China at a crucial time

April 01, 2025

North Korea preps new kamikaze drones

April 01, 2025

Apple faces false advertising lawsuit over AI promises

March 25, 2025

The Vatican wants to protect children from AI dangers

March 25, 2025

Europe hungers for faster chips

March 25, 2025

How DeepSeek changed China’s AI ambitions

March 25, 2025

Inside the fight to shape Trump’s AI policy

March 18, 2025

Europol warns of AI-powered organized crime

March 18, 2025

Europe’s biggest companies want to “Buy European”

March 18, 2025

Beijing calls for labeling of generative AI

March 18, 2025

The new AI threats from China

March 18, 2025

DeepSeek says no to outside investment — for now

March 11, 2025

Palantir delivers two key AI systems to the US Army

March 11, 2025

China announces a state-backed AI fund

March 11, 2025

Did Biden’s chip rules go too far?

March 04, 2025

China warns AI executives over US travel

March 04, 2025

Trump cuts come to the National Science Foundation

March 04, 2025

The first AI copyright win is here — but it’s limited in scope

February 25, 2025

Adobe’s Firefly is impressive and promises it’s copyright-safe

February 25, 2025

OpenAI digs up a Chinese surveillance tool

February 25, 2025

Trump plans firings at NIST, tasked with overseeing AI

February 25, 2025

Silicon Valley and Washington push back against Europe

February 25, 2025

France puts the AI in laissez-faire

February 18, 2025

Meta’s next AI goal: building robots

February 18, 2025

Intel’s suitors are swarming

February 18, 2025

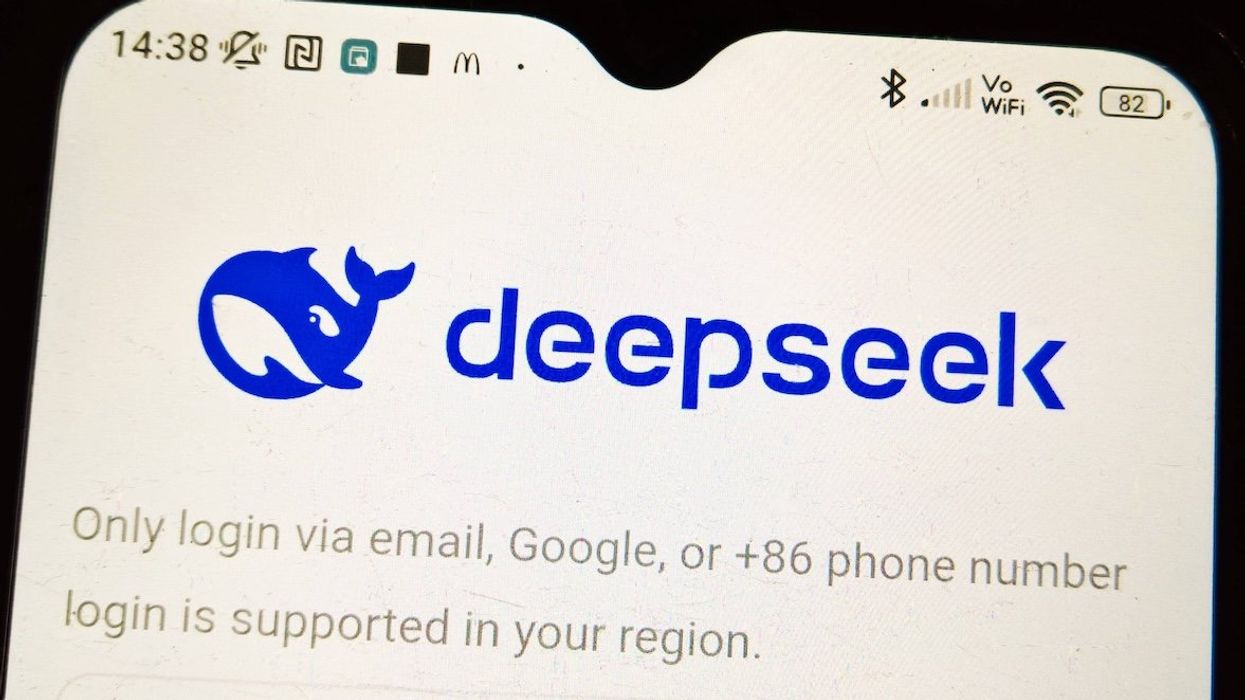

South Korea halts downloads of DeepSeek

February 18, 2025

Elon Musk’s government takeover is powered by AI

February 11, 2025

First US DeepSeek ban could be on the horizon

February 11, 2025

France’s nuclear power supply to fuel AI

February 11, 2025

Christie’s plans its first AI art auction

February 11, 2025

Elon Musk wants to buy OpenAI

February 11, 2025

JD Vance preaches innovation above all

February 11, 2025

AI pioneers share prestigious engineering prize

February 04, 2025

Britain unveils new child deepfake law

February 04, 2025

OpenAI strikes a scientific partnership with US National Labs

February 04, 2025

Europe’s AI Act starts to take effect

February 04, 2025

Is DeepSeek the next US national security threat?

February 04, 2025

OpenAI launches ChatGPT Gov

January 28, 2025

An AI weapon detection system failed in Nashville

January 28, 2025

An Oscar for AI-enhanced films?

January 28, 2025

What DeepSeek means for the US-China AI war

January 28, 2025

What Stargate means for Donald Trump, OpenAI, and Silicon Valley

January 28, 2025

Trump throws out Biden’s AI executive order

January 21, 2025

Can the CIA’s AI chatbot get inside the minds of world leaders?

January 21, 2025

Doug Burgum’s coal-filled energy plan for AI

January 21, 2025

Day Two: The view for AI from Davos

January 21, 2025

Is the TikTok threat really about AI?

January 21, 2025

Biden wants AI development on federal land

January 14, 2025

Biden has one week left. His chip war with China isn’t done yet.

January 14, 2025

British PM wants sovereign AI

January 14, 2025

Automation is coming. Are you ready?

January 14, 2025

OpenAI offers its vision to Washington

January 14, 2025

Meta wants AI users — but maybe not like this

January 07, 2025

CES will be all about AI

January 07, 2025

Questions remain after sanctions on a Russian disinformation network

January 07, 2025

5 AI trends to watch in 2025

January 07, 2025

AI companies splash the cash around for Trump’s inauguration fund

December 17, 2024

Trump wades into the dockworkers dispute over automation

December 17, 2024

The world of AI in 2025

December 17, 2024

2024: The Year of AI

December 17, 2024

Microsoft gets OK to send chips to the UAE

December 10, 2024

Nvidia forges deals in American Southwest and Southeastern Asia

December 10, 2024

The AI military-industrial complex is here

December 10, 2024

Biden tightens China’s access to chips one last time

December 03, 2024

Intel is ready to move forward — without its CEO

December 03, 2024

Amazon is set to announce its newest AI model

December 03, 2024

Can OpenAI reach 1 billion users?

December 03, 2024

Will AI companies ever be profitable?

November 26, 2024

The US is thwarting Huawei’s chip ambitions

November 26, 2024

The AI energy crisis looms

November 26, 2024

Amazon’s grand chip plans

November 26, 2024
