The European Parliament passed the Artificial Intelligence Act on March 13, making the European Union the world’s first major jurisdiction to pass comprehensive regulations for the emerging technology. This capped a five-year effort to manage AI and its potential to disrupt every industry and cause geopolitical tensions.

The AI Act, which takes effect later this year, places basic transparency requirements on generative AI models such as OpenAI’s GPT-4, mandating that their makers share some information about how they are trained. There are more stringent rules for more powerful models or ones that will be used in sensitive sectors, such as law enforcement or critical infrastructure. As with the EU’s data privacy law, there are steep penalties for companies that violate the new AI legislation – up to 7% of their annual global revenue.

GZERO spoke with Eva Maydell, a Bulgarian member of the European Parliament on the Committee on Industry, Research, and Energy, who negotiated the details of the AI Act. We asked her about the imprint Europe is leaving on global AI regulation.

GZERO: What drove you to spearhead work on AI in the European Parliament?

MEP Eva Maydell: It’s vital that we tackle not only the challenges and opportunities of today but also those of tomorrow. That way, we can ensure that Europe is its most resilient and prepared. One of the most interesting and challenging aspects of being a politician who works on tech policy is trying to strike the right balance between enabling innovation and competitiveness and ensuring we have the right protections and safeguards in place. Artificial intelligence has the potential to change the world we live in, and having the opportunity to work on such an impactful piece of law was a privilege and a responsibility.

How do you think the AI Act balances regulation with innovation? Can Europe become a standard-setter for the AI industry while also encouraging development and progress within its borders?

Maydell: I fought very hard to ensure that innovation remained a strong feature of the AI Act. However, the proof of the pudding is in the eating. We must acknowledge that Europe has some catching up to do. AI take-up by European companies is 11%. Europeans rely on foreign countries for 80% of digital products and services. We also have to tackle inflation and stagnating growth. AI has the potential to be the engine for innovation, creativity, and prosperity, but only if we ensure that we keep working on all the other important pieces of the puzzle, such as a strong single market and greater access to capital.

AI is evolving at a rapid pace. Does the AI Act set Europe up to be responsive to unforeseen advancements in technology?

Maydell: One of the most difficult aspects of regulating technology is trying to regulate the unknown. However, this is why it’s essential to stick to principles rather than over-prescription wherever possible: for example, taking a risk-based approach and, where possible, aligning with international standards. This gives you the ability to adapt. It is also why the success of the AI Office and AI Forum will be so important. The guidance that we offer businesses and organizations in the coming months on how to implement the AI Act will be key to its long-term success. Beyond the pages of the AI Act, we need to think about technological foresight. This is why I launched an initiative at the 60th annual Munich Security Conference – the “Council on the Future.” It aims to bridge the foresight and collaboration gap between the public and private sector with a view toward enabling the thoughtful stewardship of technology.

Europe is the first mover on AI regulation. How would you like to see the rest of the world follow suit and pass their own laws? How can Europe be an example to other countries?

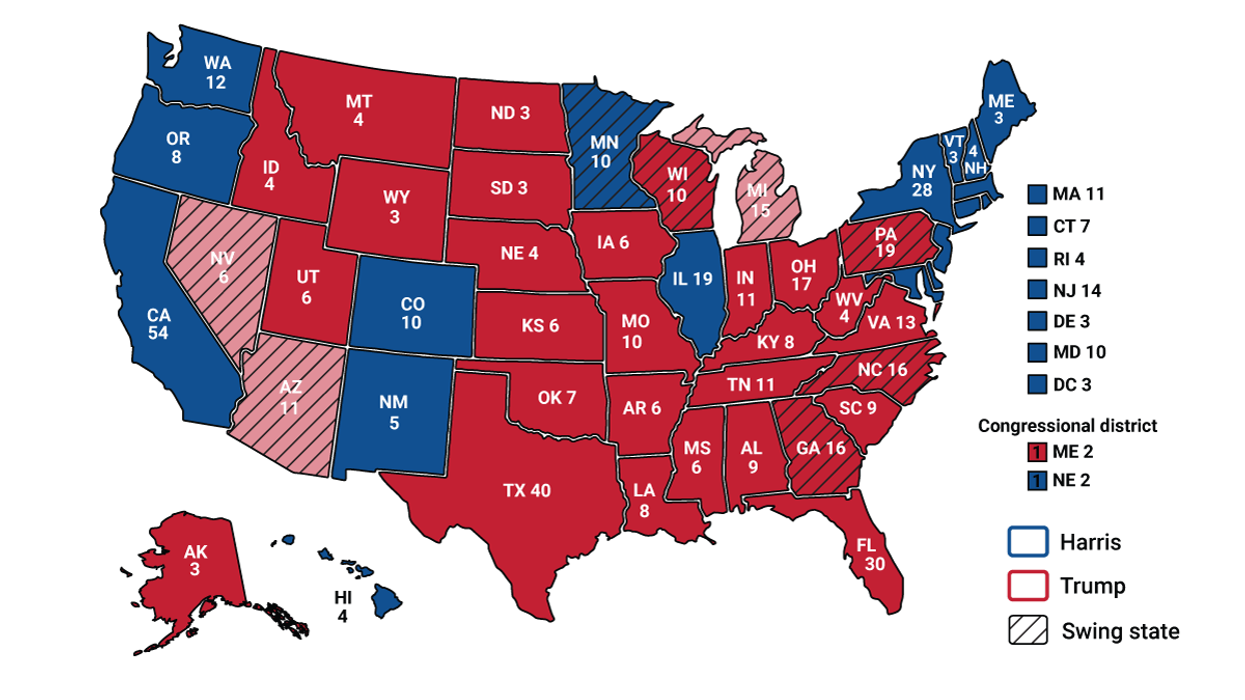

Maydell: I hope we’re an example to others in the sense that we have tried to take a responsible approach to the development of AI. We are already seeing nations around the world take important steps towards shaping their own governance structures for AI. We have the Executive Order in the US, and the UK had the AI Safety Summit. It is vital that like-minded nations work together to ensure that there is broader coherence around the values associated with the development and use of our technologies. Deeper collaboration through the G7, the UN, and the OECD is something we must continue to pursue.

Is there anything the AI Act doesn't do that you'd like to turn your attention to next?

Maydell: The AI Act is not a silver bullet, but it is an important piece of a much bigger puzzle. We have adopted an unprecedented amount of digital legislation in the last five years. With these strong regulatory foundations in place, my hope is that we now focus on perhaps the less newsworthy but equally important issue of good implementation. This means cutting red tape, reducing existing excess bureaucracy, and removing any frictions or barriers between different EU laws in the digital space. The more clarity and certainty we can offer companies, the more likely it is that Europe will attract inward investment and be the birthplace of some of the biggest names in global tech.