
GZERO AI

The latest on artificial intelligence and its implications - from the GZERO AI newsletter.


Marietje Schaake, International Policy Fellow, Stanford Human-Centered Artificial Intelligence, and former European Parliamentarian, co-hosts GZERO AI, our new weekly video series intended to help you keep up and make sense of the latest news on the AI revolution. In this episode, she talks about the potential pitfalls of the imminent EU AI Act and the sudden resistance that could jeopardize it altogether.

After a weekend full of drama around OpenAI, it is now time to shift to another potentially dramatic conclusion of an AI challenge, namely the EU AI Act, which is entering its final phase. This week, the Member States of the EU will decide on their position. And there is sudden resistance, coming from France and Germany in particular, to including foundation models in the EU AI Act. I think that is a mistake. I think it is crucial for a safe but also competitive and democratically governed AI ecosystem that foundation models are actually part of the EU AI Act, which would be the most comprehensive AI law that the democratic world has put forward. So, the world is watching, and it is important that EU leaders understand that time is really of the essence if we look at the speed of development of artificial intelligence and, in particular, generative AI.

And actually, that speed of development is what's catching up now with the negotiators, because in the initial phase, the European Commission had designed the law to be risk-based, looking at the outcomes of AI applications. So, if AI is used to decide on whether to hire someone or give them access to education or social benefits, the consequences for the individual impacted can be significant, and so, proportionate to the risk, mitigating measures should be in place. The law was designed to cover anything from very low- or no-risk applications to high-risk and unacceptable-risk applications, with a social credit scoring system as an example of the unacceptable. But then when generative AI products started flooding the market, the European Parliament, which was taking its position, decided, “We need to look at the technology as well. We cannot just look at the outcomes.” And I think that is critical because foundation models are so fundamental. Really, they form the basis of so much downstream use that if there are problems at that initial stage, they ripple through like an earthquake in many, many applications. And if you don't want startups or downstream users to be confronted with liability or very high compliance costs, then it's also important to start at the roots and make sure that the core ingredients of the uses of these AI models are properly governed and that they are safe and okay to use.

So, when I look ahead at December, when the European Commission, the European Parliament, and Member States come together, I hope negotiators will look at the way in which foundation models can be regulated, that it is not a yes or no to regulation, but a progressive, tiered approach that really attaches the strongest mitigating or scrutiny measures to the most powerful players, the way that has been done in many other sectors. It would be very appropriate for AI foundation models as well. There's a lot of debate going on. Open letters and op-eds are being penned, experts are speaking out, and I'm sure there is a lot of heated debate between Member States of the European Union. I just hope that the negotiators appreciate that the world is watching, many people with great hope as to how the EU can once again regulate on the basis of its core values, and that with what we now know about how generative AI is built upon these foundation models, it would be a mistake to overlook them in the most comprehensive EU AI law.


More from GZERO AI

What we learned from a week of AI-generated cartoons

April 01, 2025

Nvidia delays could slow down China at a crucial time

April 01, 2025

North Korea preps new kamikaze drones

April 01, 2025

Apple faces false advertising lawsuit over AI promises

March 25, 2025

The Vatican wants to protect children from AI dangers

March 25, 2025

Europe hungers for faster chips

March 25, 2025

How DeepSeek changed China’s AI ambitions

March 25, 2025

Inside the fight to shape Trump’s AI policy

March 18, 2025

Europol warns of AI-powered organized crime

March 18, 2025

Europe’s biggest companies want to “Buy European”

March 18, 2025

Beijing calls for labeling of generative AI

March 18, 2025

The new AI threats from China

March 18, 2025

DeepSeek says no to outside investment — for now

March 11, 2025

Palantir delivers two key AI systems to the US Army

March 11, 2025

China announces a state-backed AI fund

March 11, 2025

Did Biden’s chip rules go too far?

March 04, 2025

China warns AI executives over US travel

March 04, 2025

Trump cuts come to the National Science Foundation

March 04, 2025

The first AI copyright win is here — but it’s limited in scope

February 25, 2025

Adobe’s Firefly is impressive and promises it’s copyright-safe

February 25, 2025

OpenAI digs up a Chinese surveillance tool

February 25, 2025

Trump plans firings at NIST, tasked with overseeing AI

February 25, 2025

Silicon Valley and Washington push back against Europe

February 25, 2025

France puts the AI in laissez-faire

February 18, 2025

Meta’s next AI goal: building robots

February 18, 2025

Intel’s suitors are swarming

February 18, 2025

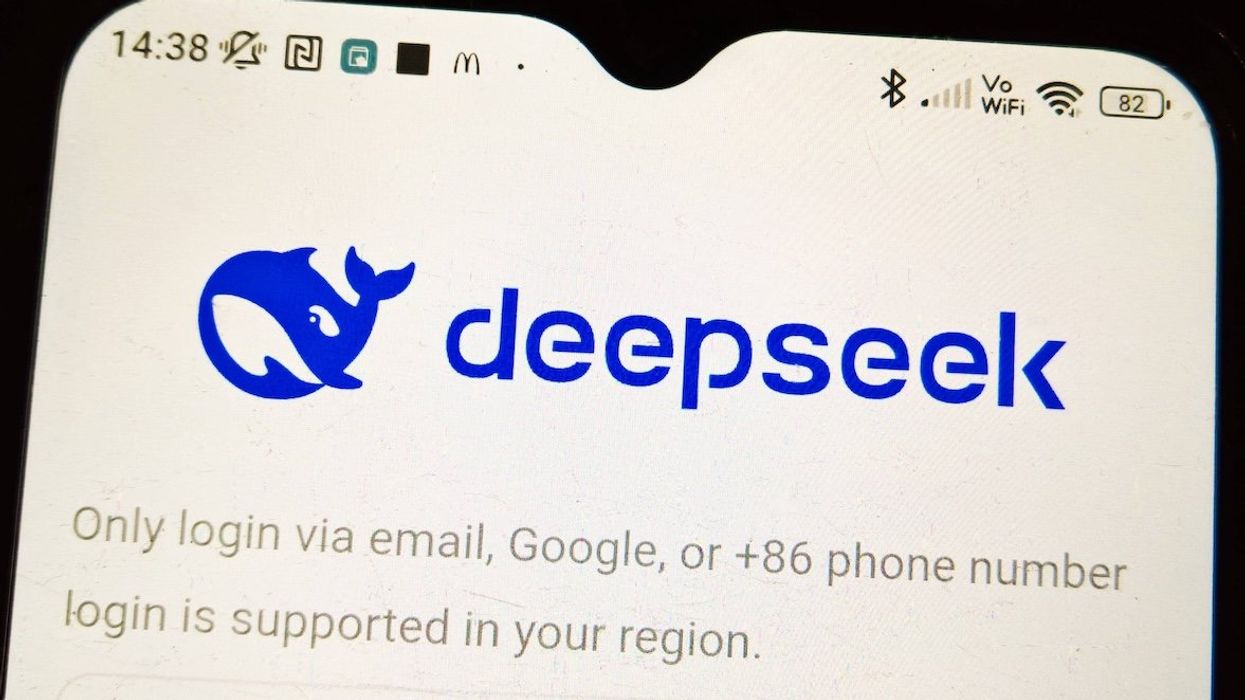

South Korea halts downloads of DeepSeek

February 18, 2025

Elon Musk’s government takeover is powered by AI

February 11, 2025

First US DeepSeek ban could be on the horizon

February 11, 2025

France’s nuclear power supply to fuel AI

February 11, 2025

Christie’s plans its first AI art auction

February 11, 2025

Elon Musk wants to buy OpenAI

February 11, 2025

JD Vance preaches innovation above all

February 11, 2025

AI pioneers share prestigious engineering prize

February 04, 2025

Britain unveils new child deepfake law

February 04, 2025

OpenAI strikes a scientific partnership with US National Labs

February 04, 2025

Europe’s AI Act starts to take effect

February 04, 2025

Is DeepSeek the next US national security threat?

February 04, 2025

OpenAI launches ChatGPT Gov

January 28, 2025

An AI weapon detection system failed in Nashville

January 28, 2025

An Oscar for AI-enhanced films?

January 28, 2025

What DeepSeek means for the US-China AI war

January 28, 2025

What Stargate means for Donald Trump, OpenAI, and Silicon Valley

January 28, 2025

Trump throws out Biden’s AI executive order

January 21, 2025

Can the CIA’s AI chatbot get inside the minds of world leaders?

January 21, 2025

Doug Burgum’s coal-filled energy plan for AI

January 21, 2025

Day Two: The view for AI from Davos

January 21, 2025

Is the TikTok threat really about AI?

January 21, 2025

Biden wants AI development on federal land

January 14, 2025

Biden has one week left. His chip war with China isn’t done yet.

January 14, 2025

British PM wants sovereign AI

January 14, 2025

Automation is coming. Are you ready?

January 14, 2025

OpenAI offers its vision to Washington

January 14, 2025

Meta wants AI users — but maybe not like this

January 07, 2025

CES will be all about AI

January 07, 2025

Questions remain after sanctions on a Russian disinformation network

January 07, 2025

5 AI trends to watch in 2025

January 07, 2025

AI companies splash the cash around for Trump’s inauguration fund

December 17, 2024

Trump wades into the dockworkers dispute over automation

December 17, 2024

The world of AI in 2025

December 17, 2024

2024: The Year of AI

December 17, 2024

Microsoft gets OK to send chips to the UAE

December 10, 2024

Nvidia forges deals in American Southwest and Southeastern Asia

December 10, 2024

The AI military-industrial complex is here

December 10, 2024

Biden tightens China’s access to chips one last time

December 03, 2024

Intel is ready to move forward — without its CEO

December 03, 2024

Amazon is set to announce its newest AI model

December 03, 2024

Can OpenAI reach 1 billion users?

December 03, 2024

Will AI companies ever be profitable?

November 26, 2024

The US is thwarting Huawei’s chip ambitions

November 26, 2024

The AI energy crisis looms

November 26, 2024

Amazon’s grand chip plans

November 26, 2024
