Paris Peace Forum

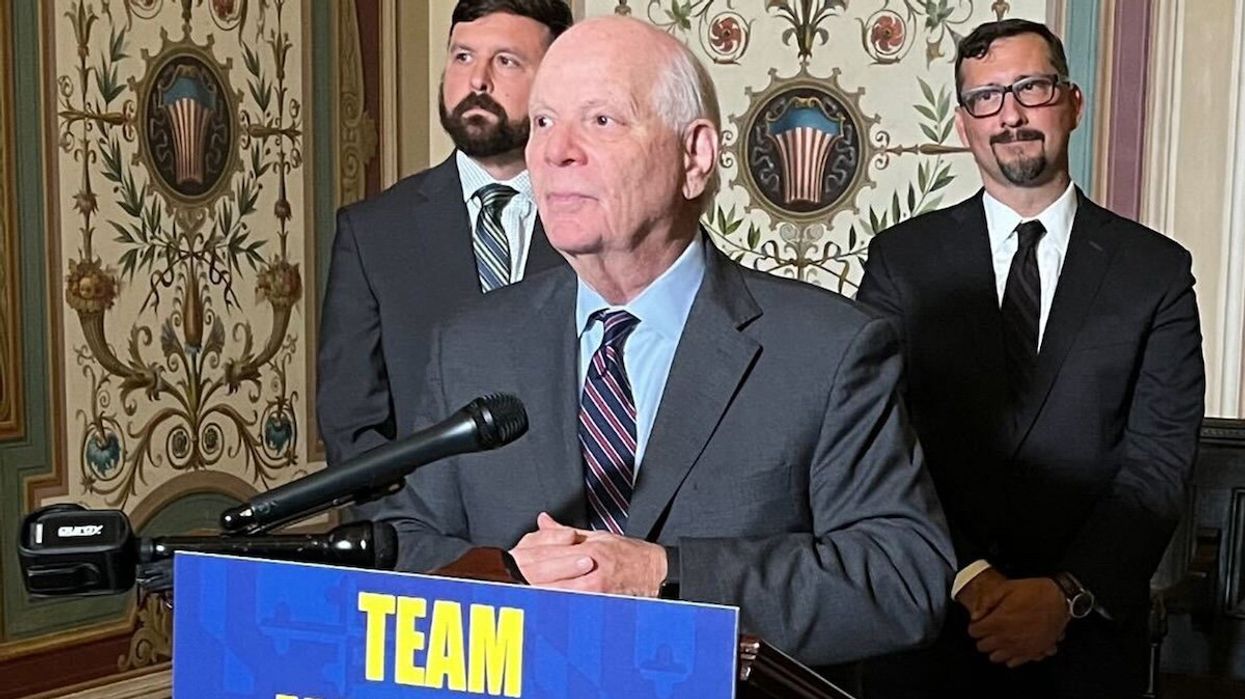

Rebuilding post-election trust in the age of AI

In a GZERO Global Stage discussion at the 7th annual Paris Peace Forum, Teresa Hutson, Corporate Vice President at Microsoft, reflected on the anticipated impact of generative AI and deepfakes on elections around the world. Despite widespread concerns, she noted, deepfakes did not significantly alter electoral outcomes. Instead, Hutson highlighted a subtler effect: the erosion of public trust in online information, a phenomenon she referred to as the "liar's dividend."

Nov 12, 2024