Why is artificial intelligence a geopolitical risk?

It has the potential to disrupt the balance of power between nations. AI can be used to create new weapons, automate production, and increase surveillance capabilities, all of which can give certain countries an advantage over others. AI can also be used to manipulate public opinion and interfere in elections, which can destabilize governments and lead to conflict.

Your author did not write the above paragraph. An AI chatbot did. And the fact that the chatbot is so candid about the political mayhem it can unleash is quite troubling.

No wonder, then, that AI, powered by social media, is Eurasia Group’s No. 3 top risk for 2023. (Fun fact: The title, “Weapons of Mass Disruption,” was also generated in seconds by a ChatGPT bot.)

How big a threat to democracy is AI? Well, bots can't (yet) meddle in elections or peddle fake news to influence public opinion on their own. But authoritarians, populists, and opportunists can deploy AI to help do both of these things better and faster.

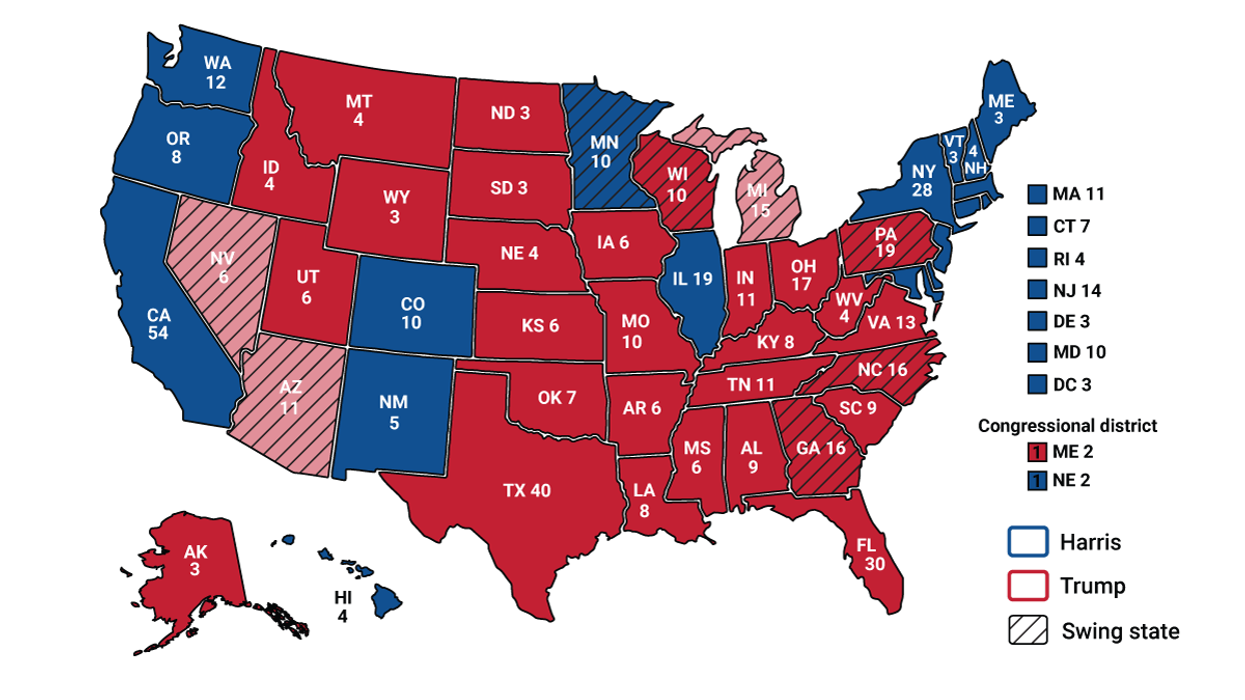

Philippine President Ferdinand Marcos Jr. relied heavily on his troll army on TikTok to win the votes of young Filipinos in the 2022 election. Automating the process with bots would allow him, or any politician with access to AI, to cast a wider net and leap into viral conversations almost immediately on a social platform that already runs on an AI-driven algorithm.

Another problem is deepfakes: videos in which a person's face or body is altered to make them appear to be someone else, typically intended for political disinformation (check out Jordan Peele's Obama). AI now makes them so convincing that they are very hard to spot. Indeed, DARPA, the same Pentagon agency that brought us the internet, is perfecting its own deepfakes in order to develop tech to help detect what’s real and what’s fake.

Still, the "smarter" AI gets at propagating lies on social media, and the more widespread its use by shameless politicians, the more dangerous AI becomes. By the time viral content is proven to be fake, it might already be too late.

Imagine that supporters of Narendra Modi, India's Hindu nationalist PM, want to fire up the base by fanning sectarian flames. If AI can help them create a half-decent deepfake video of Muslims slaughtering a cow (a sacred animal for Hindus) that spreads fast enough, the anger might boil over before people check whether the clip is real, if they even trust anyone to independently verify it.

AI can also disrupt politics by getting bots to do stuff that only humans, however flawed, should. Indeed, automating the political decision-making process "can lead to biased outcomes and the potential for abuse of power," the bot explains.

That’s happening right now in China, an authoritarian state that dreams of dominating AI and is already using the tech in court. Once the robot judges are fully in sync with Beijing's Orwellian social credit system, it wouldn’t be a stretch for them to rule against people who've criticized Xi Jinping on social media.

So, what, if anything, can democratic governments do about this before AI ruins everything? The bot has some thoughts.

"Governments can protect democracy from artificial intelligence by regulating the use of AI, ensuring that it is used ethically and responsibly," it says. "This could include setting standards for data collection and usage, as well as ensuring that AI is not used to manipulate or influence public opinion."

Okay, but who should be doing the regulating, and how? For years, the UN has been working on a so-called digital Geneva Convention that would set global rules to govern cyberspace, including AI. But the talks have been bogged down by (surprise!) Russia, whose president, Vladimir Putin, warned way back in 2017 that the nation that leads in AI will rule the world.

Governments, the bot adds, "should also ensure that AI is transparent and accountable, and that its use is monitored and evaluated. Finally, [they] should ensure that AI is used to benefit society, rather than to undermine it."

The bot raises a fair point: AI can also do a lot of good for humanity. A good example is how machine learning can help us live longer, healthier lives by detecting diseases earlier and improving certain surgeries.

But, as Eurasia Group's report underscores, "that's the thing with revolutionary technologies, from the printing press to nuclear fission and the internet — their power to drive human progress is matched by their ability to amplify humanity's most destructive tendencies."