
Economy

Global economy news and analysis from GZERO Media


Ian Bremmer's Quick Take: Hi, everybody. Ian Bremmer here. A happy Monday to you. And a Quick Take today on artificial intelligence and how we think about it geopolitically. I'm an enthusiast, I want to be clear. I am more excited about AI as a driver of growth and productivity for the 8 billion of us on this planet than about anything I have seen since the internet, maybe since we invented the semiconductor. It's extraordinary how much it will let us apply human ingenuity faster and more broadly, both to challenges that exist today and to those we don't even know about yet. But I'm not worried about the upside, in the sense that a huge amount of money is already being devoted to those companies. The people who run them are working as fast as they humanly can to get better, to unlock those opportunities, and to beat their competitors there. What worries me is the harder side: we're not spending the resources on the consequences of artificial intelligence that will be more upsetting for populations, the ones that will require some level of government and other intervention, or else. And I see four of them.

First is disinformation. We know that AI bots can be very confident, and they're also frequently very wrong. If you can no longer tell an AI bot from a human being in text, and very soon in audio and video, then you can no longer tell truth from falsehood. That is not good news for democracies. It's actually good news for authoritarian countries that deploy artificial intelligence for their own political stability and benefit. But in the United States, Canada, Europe, or Japan, it's much more deeply corrosive. Unless we are able to put very clear labeling and restrictions on what is AI and what is not, I think we're going to be in very serious trouble: our institutions will erode much faster than anything we've seen through social media, cable news, or any of the other challenges we've had in the information space.

Second, and related, is proliferation: the spread of AI technologies to bad actors, or to tinkerers who don't have the knowledge and are indifferent to the chaos they may sow. Today there are about a hundred human beings who have both the knowledge and the technology to deploy a smallpox virus. Don't do that, right? But very soon, with AI, those numbers are going way up. And not just for creating dangerous new viruses or lethal autonomous drones, but also for writing malware and deploying it to steal money from people, to destroy institutions, or to undermine an election. Putting all of these capabilities in the hands not just of a small number of governments, but of individuals with a laptop and a little bit of programming skill, is going to make it a lot harder to respond effectively. We saw some of this with the offensive cyber scare, which of course created big security industries to respond to it, and lots of costs. That's what we're going to see with AI, but in every field.

Then you have the displacement risk. A lot of people have talked about this: a whole bunch of people no longer have productive jobs because AI replaces them. I'm not particularly worried about this at the macro level. I believe that the new jobs that will be created, many of which we can't even imagine right now, together with the existing jobs that become much more productive because they use AI effectively, will outweigh the jobs lost to artificial intelligence. But they're going to happen at the same time. And unless you have policies in place that help retrain the people who are displaced in the near term, and also simply take care of them economically, those people get angrier. They become much more supportive of anti-establishment politicians, and they feel that their existing political leaders are illegitimate. We've seen this with free trade and the hollowing out of the middle classes. We've seen it with automation and robotics. It's going to be a lot faster, and a lot broader, with AI.

And then finally, the one that I worry about the most and that doesn't get enough attention: the replacement risk. So many human beings will replace the relationships they have with other human beings with AI. They may be doing this knowingly, or they may be doing it without knowing. But I certainly see how many people, particularly young people, are already developing real relationships with early-stage AI bots. And we as humans need communities and families and parents who care about us and take care of us to become socially adaptable animals.

And when that's happening through artificial intelligence that not only doesn't care about us, but also doesn't have human beings as its principal interest (the principal interest is the business model, and human beings are very much subsidiary and not necessarily aligned with it), that creates a lot of dysfunction. I fear a level of dehumanization that could come very, very quickly, especially for young people, through addictions and antisocial relationships with AI, which we'll then try to fix with AI bots that do therapy. That is a direction we really don't want to head in on this planet. We will be doing real-time experimentation on human beings. We never do that with a new GMO food. We never do that with a new vaccine, even when we're facing a pandemic. We shouldn't be doing that with our brains, with our persons, with our souls. And I hope that gets addressed real fast.

So anyway, that's a little bit from me on the geopolitics of AI, something I'm writing about and thinking about a lot these days. I hope everyone's well, and I'll talk to you all real soon.

Keep reading...Show less

More from Economy

Venezuela’s new leadership?

January 06, 2026

Tools and Weapons – In Conversation with Ed Policy

January 06, 2026

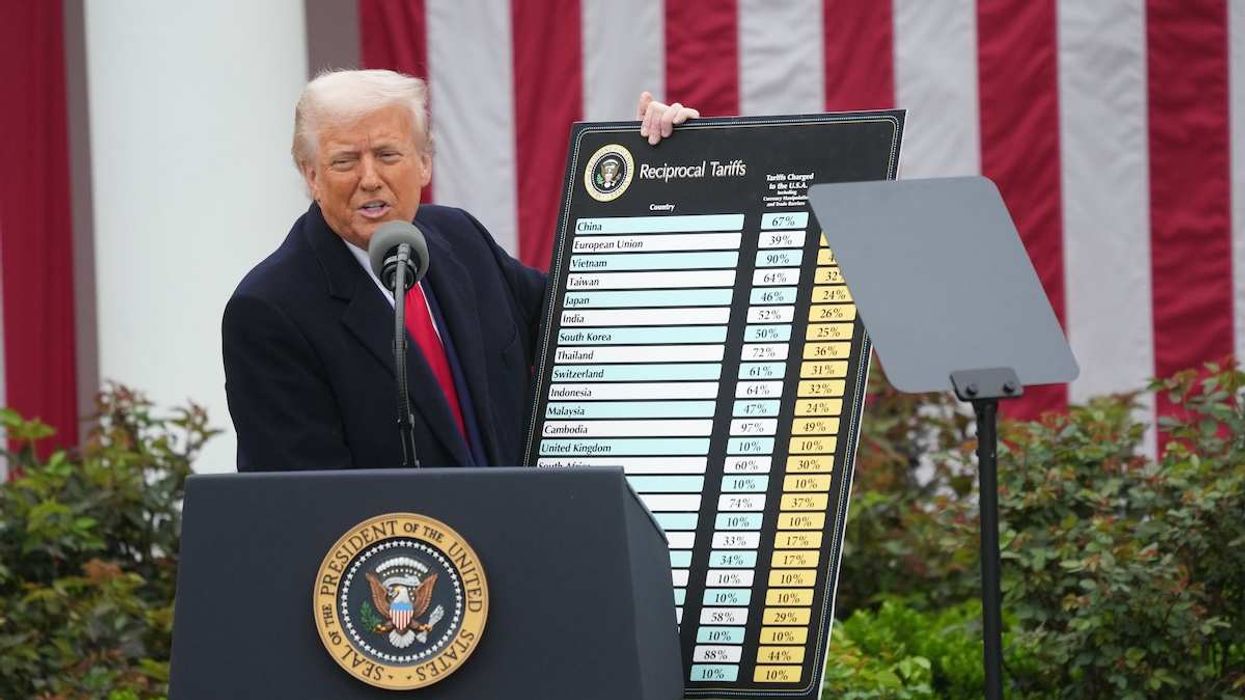

Trump’s “Eff around and find out” world

January 06, 2026

Dealing with Delcy: Regime change without changing the regime

January 06, 2026

The biggest geopolitical risks of 2026 revealed

January 05, 2026

Where things stand with Venezuela: Washington makes its demands

January 05, 2026

Venezuela after Maduro: the key questions now.

January 03, 2026

Hard numbers: Venezuela edition

January 03, 2026

Quick Take

Jan 03, 2026

Puppet Regime

Dec 31, 2025

Geopolitical uncertainty? Oil markets no longer care

December 30, 2025

ask ian

Dec 29, 2025

A year after Assad’s fall, can Syria hold together?

December 29, 2025

Economic Trends Shaping 2026: Trade, AI, Small Business

December 27, 2025

Protests against AI rock the North Pole

December 24, 2025

Beyond Gaza and Ukraine: The wars the world is ignoring

December 23, 2025

Revisiting the top geopolitical risks of 2025

December 23, 2025

Top risks of 2025, reviewed

December 23, 2025

Israel is still banning foreign media from entering Gaza

December 23, 2025

Is the US heading toward military strikes in Venezuela?

December 22, 2025

The top geopolitical stories of 2025

December 22, 2025

Trump to Zelensky: Just keep it light bro

December 22, 2025

GZERO World with Ian Bremmer

Dec 22, 2025

Economic Trends Shaping 2026: Trade, AI, Small Business

December 21, 2025

War and Peace in 2025, with Clarissa Ward and Comfort Ero

December 20, 2025

Ian Explains

Dec 19, 2025

A former immigration chief weighs in on Trump’s second act

December 19, 2025

You vs. the News: A Weekly News Quiz - December 19, 2025

December 19, 2025

Trump is at risk of falling into the Biden trap on the economy

December 18, 2025

Europe’s moment of truth

December 17, 2025

Top 10 Quotes from GZERO World with Ian Bremmer in 2025

December 17, 2025

Consumers are spending–just not evenly

December 17, 2025

Viewpoint: Trump wants a Europe more like US

December 17, 2025

What’s Good Wednesdays™, December 17, 2025 – holiday movie edition

December 17, 2025

Walmart's $350 billion commitment to American jobs

December 17, 2025

Watch today's livestream: the Top Risks of 2026 with Ian Bremmer

December 16, 2025

Tools and Weapons – In Conversation with Ed Policy

December 16, 2025

Six elections to watch in 2026

December 16, 2025

Trump, loyalty, and the limits of accountability

December 16, 2025

Putin's big holiday party mistake

December 16, 2025

Wikipedia's cofounder says the site crossed a line on Gaza

December 16, 2025

Europe takes control of Ukraine’s future

December 15, 2025

An ally under suspicion

December 15, 2025

In Wikipedia We Trust?

December 15, 2025

Economic Trends Shaping 2026: Trade, AI, Small Business

December 13, 2025

Why we still trust Wikipedia, with cofounder Jimmy Wales

December 13, 2025

Understanding AI in 2025 with Global Stage

December 13, 2025

Can we still trust Wikipedia?

December 12, 2025

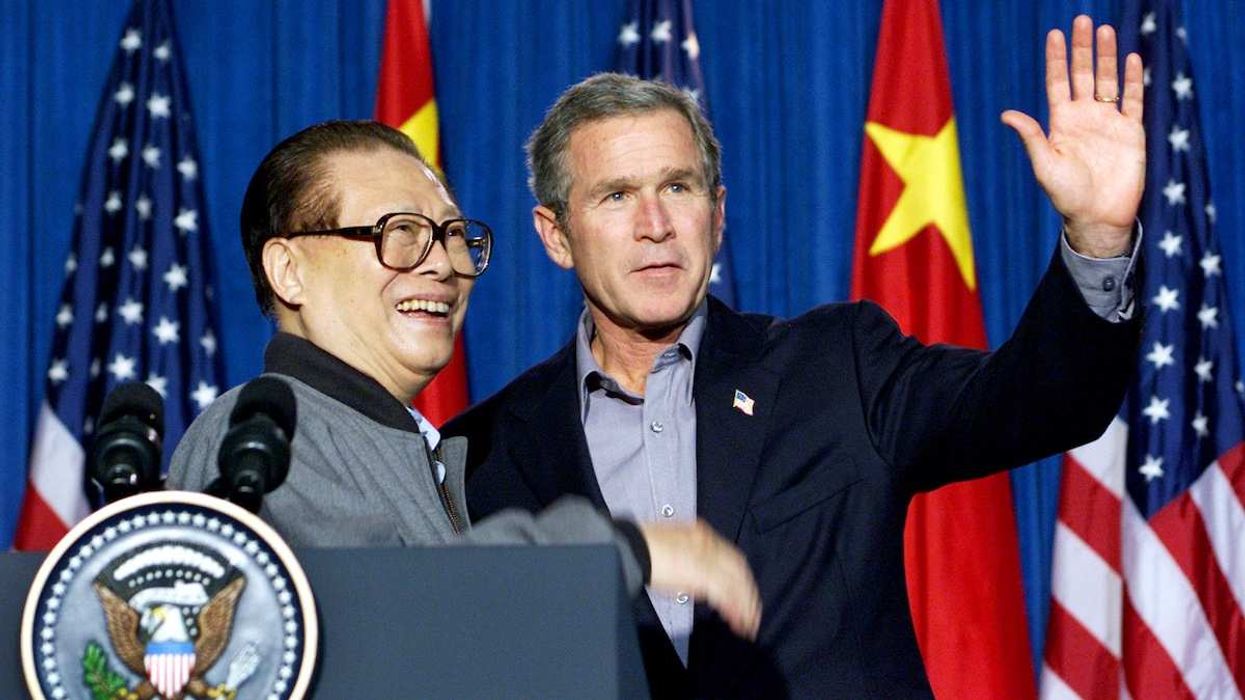

How chads and China shaped our world

December 12, 2025

You vs. the News: A Weekly News Quiz - December 12, 2025

December 12, 2025

Republicans lose on Trump’s home turf again

December 11, 2025

It’s official: Trump wants a weaker European Union

December 10, 2025

The power of sports

December 10, 2025

Japan’s leader has had a tricky start. But the public loves her.

December 10, 2025

What’s Good Wednesdays™, December 10, 2025

December 10, 2025

Walmart's $350 billion commitment to American jobs

December 10, 2025

Tools and Weapons – In Conversation with Ed Policy

December 09, 2025

Honduras awaits election results, but will they be believed?

December 09, 2025

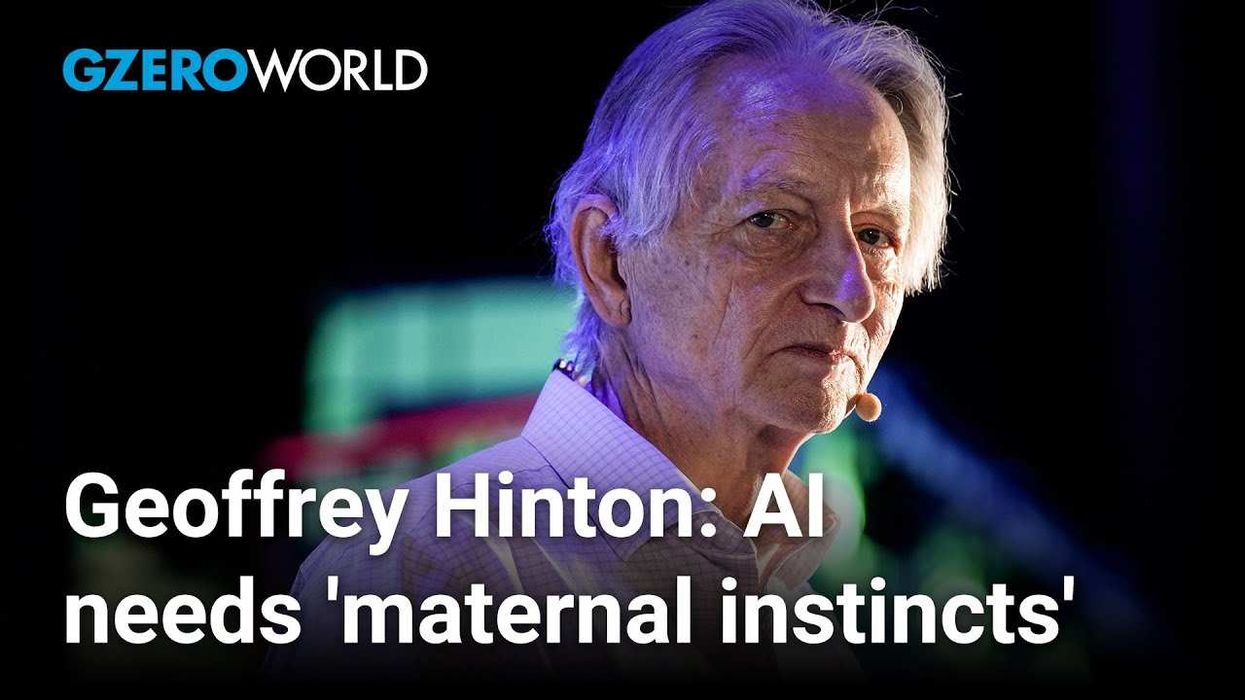

Geoffrey Hinton on how humanity can survive AI

December 09, 2025

Notre Dame, politics, and playing by their own rules

December 08, 2025

GZERO Series

GZERO Daily: our free newsletter about global politics

Keep up with what’s going on around the world - and why it matters.